Why You Need Standardized Data Today

Digital transformation (DX) often begins with data standardization: the process of converting data from many different sources into one consistent format. It’s not exciting, but it’s true. Want comprehensive insight into your organization? Standardize your data. Think it would be innovative to project holographic digital twins of your machinery, working in real time, at any location? Standardize your data. Need to reduce costs across supply chains and maintenance cycles without time-consuming audits? You get the idea.

Information is power, but that power can be illusory without proper context. For example, say you’re overseeing the global rollout of a new car. This car sells 5 million units within the first 12 months. Sounds fantastic, right? Well, what if every other active model in your organization, including older ones, sold 7 million units over the same period? Also, what if 93% of those 5 million units came from only one region? Oh, and 54% of those were returned and refunded within the first three months after the sale. Context is everything, and clear context is only possible when the data is ready, available, and reliable: in other words, standardized.

Understanding Data Standardization

For those new to data management initiatives, data standardization is simply bringing information across an organization into the same format and level of quality. It can apply to file types (like always using Excel), naming practices (for efficient information lookup), program usage (like always using Teams for remote meetings), or other areas of workflow.

Approaching data standardization does not need to be complex. It can be done on a variety of platforms, including common solutions like Microsoft Excel. The important first step is getting a clear picture of all data sources within an organization. Partial standardization will still help, but it will be less effective if some information silos remain unstandardized. At the end of the day, it is all about uniformity, and this can be achieved with the aid of numerous software solutions or by hand.

Data standardization is not a difficult process, but it can be time-consuming. Mistakes always matter, and even the simplest error can make hours of manual data entry useless. Let’s turn to healthcare for an example: regulations require healthcare providers to keep meticulous records of their patients, including a large quantity of personally identifiable information. A mundane bit of data like an address can wreak havoc without standardization. Should “street” be abbreviated “St.” or “St”, or spelled out in full? Inconsistent labelling can easily lead to duplicates or omissions in the overall data, especially if no data-cleansing tool is checking the entries.
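To make the address problem concrete, here is a minimal Python sketch of rule-based normalization. The `SUFFIXES` lookup table and the `normalize_address` helper are hypothetical illustrations; a production system would rely on a complete suffix list and a dedicated data-cleansing tool. The sketch only treats the final word as a street suffix, so a name such as “St Paul” earlier in the string is left alone.

```python
# Hypothetical lookup of street-suffix variants -> one canonical spelling.
# A real system would use a complete list, not these few entries.
SUFFIXES = {
    "st": "Street", "street": "Street",
    "ave": "Avenue", "av": "Avenue", "avenue": "Avenue",
    "rd": "Road", "road": "Road",
}


def normalize_address(raw: str) -> str:
    """Return one canonical spelling of a simple street address."""
    # Drop periods ("St." -> "St") and collapse runs of whitespace.
    tokens = raw.replace(".", "").split()
    if not tokens:
        return ""
    # Capitalize every word consistently ("main" -> "Main").
    words = [t.capitalize() for t in tokens[:-1]]
    # Only the final word is treated as a street suffix.
    last = tokens[-1]
    words.append(SUFFIXES.get(last.lower(), last.capitalize()))
    return " ".join(words)


records = [
    {"patient": "Jane Doe", "address": "12 Main St."},
    {"patient": "Jane Doe", "address": "12 main st"},
    {"patient": "Jane Doe", "address": "12 Main Street"},
    {"patient": "Raj Patel", "address": "5 Oak Ave"},
]

# Deduplicate on (patient, normalized address).
unique = {(r["patient"], normalize_address(r["address"])) for r in records}
print(len(records), len(unique))  # 4 2
```

Once every record passes through the same function, the three spellings of Jane Doe’s address collapse into one, and the inflated count disappears.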

If an executive wants to see which of their locations gets the most foot traffic, and one location’s patient numbers are inflated by duplicate records caused by data inconsistencies, they might make a decision based on entirely wrong information. As disastrous as this sounds, keep in mind it’s only one way that non-standardized data can hold your company back. Another significant barrier arises with the data that digital transformation itself depends on.

For all this talk of machine learning and artificial intelligence (AI), it is crucial to remember that computers are, at the end of the day, incapable of thought. Even automating data entry isn’t guaranteed to produce standardized data. If the source data is inconsistent, most programs, unless specifically designed to check for it, won’t detect a problem, let alone correct it. Digital transformation depends on consistent, accessible information. Simply put, if your company can’t reliably produce readable data, it has no hope of realizing that data’s full potential.

The Benefits of Data Standardization

As with any aspect of DX, new technology or technology-related processes should never be adopted simply for the sake of “trying something new.” DX initiatives live or die on understanding the business advantages they provide, and on knowing ahead of time what to expect and what can reasonably be accomplished.

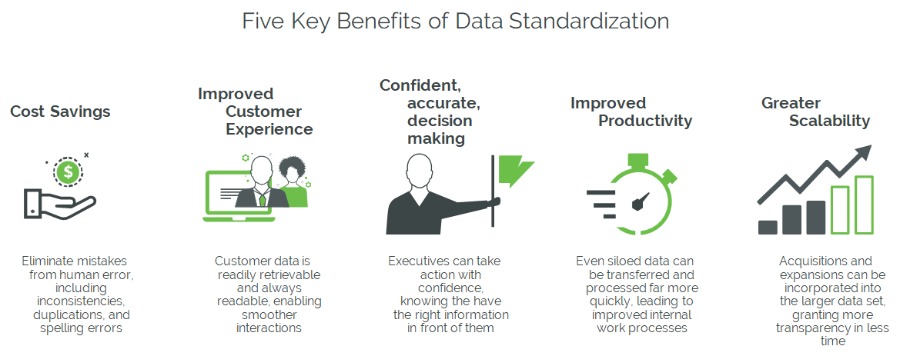

Data standardization is not as overtly ambitious as implementing a comprehensive product lifecycle management (PLM) solution or moving business architecture from an on-premises solution to a Software as a Service (SaaS) platform, but that doesn’t mean it can’t deliver real results. In addition to laying the groundwork for those more complex initiatives to function smoothly, data standardization yields numerous benefits on its own.

To begin with, there are the cost savings. Every mistake costs something, and usually the longer a mistake goes uncorrected, the higher that cost becomes. By reducing the potential for incorrect data entry, standardization greatly lowers the odds of a small mistake having a big negative impact on the bottom line. That is on top of the productivity benefit of having just one standard for data input. Even if a department is siloed and information needs to be copied over, the process will go much more quickly if all the data looks the same and fits into one template.
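One way to enforce a single standard for data input is to check every record against one shared template before it is accepted. The sketch below assumes a hypothetical three-field template (`part_id`, `quantity`, `received`) and a hypothetical `validate` function; it illustrates the idea and is not any particular product’s validation API.

```python
from datetime import date

# Hypothetical shared input template: field name -> expected type.
TEMPLATE = {"part_id": str, "quantity": int, "received": date}


def validate(record: dict) -> list:
    """Return a list of problems; an empty list means the record fits the template."""
    problems = []
    # Note: bool subclasses int in Python, so True would pass the int check.
    for field, expected in TEMPLATE.items():
        if field not in record:
            problems.append(f"missing field: {field}")
        elif not isinstance(record[field], expected):
            problems.append(
                f"{field}: expected {expected.__name__}, "
                f"got {type(record[field]).__name__}"
            )
    # Anything outside the template is flagged rather than silently accepted.
    for field in record:
        if field not in TEMPLATE:
            problems.append(f"unexpected field: {field}")
    return problems


good = {"part_id": "A-100", "quantity": 40, "received": date(2023, 5, 1)}
bad = {"part_id": "A-100", "qty": "40"}
print(validate(good))  # []
print(validate(bad))
# ['missing field: quantity', 'missing field: received', 'unexpected field: qty']
```

Rejecting or flagging nonconforming records at entry is what keeps the “copy it over” step fast later: every record that made it in already has the same shape.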

There is also the benefit of confidence. No one, not even the most intuitive leader, can be expected to understand every bit of information traveling through their organization at all times. Making this data easier to read also makes it easier to act on. Rather than holding meeting after meeting to piece together the whole picture, decision-makers can read the data, understand it, and act. This allows the organization to move more quickly and decisively, gaining a competitive edge over those that stagnate as they wrestle with which direction to follow.

How Standardized Data Empowers Digital Twins

Diving in a little deeper, let’s explore standardized data as it specifically relates to digital twins. Digital twins are virtual representations of physical products, people, processes, and spatial environments. Many advanced digital transformation solutions and products rely on digital twins as a fundamental way of presenting and using data.

With a well-designed digital twin, organizations gain access to benefits that are, frankly, impossible without the technology. Predictive maintenance, operational intelligence, and product design optimization are all possible with digital twins. That said, digital twins are not the easiest technology to implement, and many organizations struggle to take the concept out of the pilot stage and into a company-wide solution.

Data sourcing is frequently cited as a core challenge to using digital twins, and this ties directly back to data standardization. The best digital twins pull information from a variety of sources (such as IoT devices and sensors), but if each dataset is structured differently, the twin will have a very difficult time combining the data into a cohesive whole. If data cannot be sourced, the digital twin will not be able to fully represent the product, process, person, or spatial environment it is trying to capture. Digital twins are only as powerful as the information powering them, and data standardization can greatly increase their potential.
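Here is a minimal sketch of what standardizing sensor data for a digital twin can look like, assuming two hypothetical vendors: one reports Fahrenheit with Unix timestamps, the other Celsius with ISO 8601 timestamps. Both message formats, the `Reading` schema, and the `from_vendor_a`/`from_vendor_b` adapters are illustrative assumptions, not a real product’s formats. Each source gets a small adapter that converts into one shared schema, so the twin only ever consumes a single format.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Reading:
    """Hypothetical common schema every source is converted into."""
    sensor_id: str
    timestamp: datetime  # always timezone-aware UTC
    temperature_c: float


def from_vendor_a(msg: dict) -> Reading:
    # Assumed format: {"id": ..., "ts": <Unix seconds>, "temp_f": <Fahrenheit>}
    return Reading(
        sensor_id=msg["id"],
        timestamp=datetime.fromtimestamp(msg["ts"], tz=timezone.utc),
        temperature_c=round((msg["temp_f"] - 32) * 5 / 9, 2),
    )


def from_vendor_b(msg: dict) -> Reading:
    # Assumed format: {"sensor": ..., "time": "<ISO 8601 with offset>", "celsius": ...}
    return Reading(
        sensor_id=msg["sensor"],
        timestamp=datetime.fromisoformat(msg["time"]).astimezone(timezone.utc),
        temperature_c=float(msg["celsius"]),
    )


a = from_vendor_a({"id": "pump-7", "ts": 1700000000, "temp_f": 212.0})
b = from_vendor_b({"sensor": "pump-7", "time": "2023-11-14T22:13:20+00:00", "celsius": 100})
print(a == b)  # True
```

The design choice is to keep conversion at the edges: adding a third sensor vendor means writing one more adapter, not changing the twin itself.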

Is Your Data Standardized Today?

Really, the question of data standardization is a simple one. Are you confident that data within your organization can be easily exchanged and read across multiple systems without causing confusion? Do you believe that departments within your business can share information quickly without creating duplicates or omissions? What about sharing between different locations?

If answers to these questions are difficult to come by, it’s a sign that your company’s data standardization policies may not be as well defined as they need to be. In every industry, DX initiatives and improvements are already well underway. Businesses that cannot even guarantee data consistency will be at a severe disadvantage against competitors with concrete procedures and programs in place to manage and label data comprehensively. It may not be the most exhilarating investment, but sowing data standardization strategies now will reap large rewards in the future.